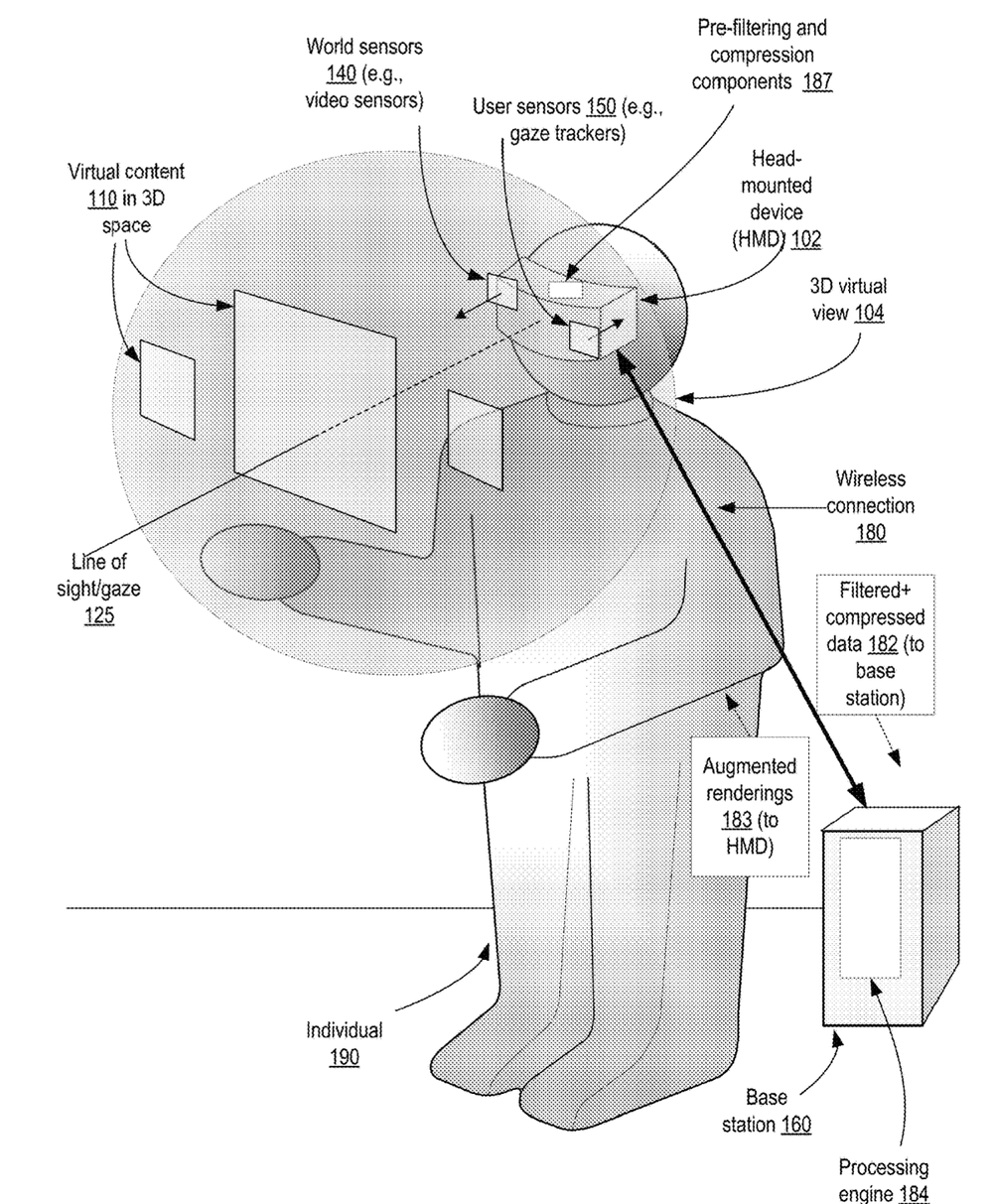

Apple has been granted a patent (number 10,861,142) involving “gaze detection” for the rumored “Apple Glasses,” an augmented reality/virtual reality/mixed reality head-mounted display (HMD).

In the patent data, the tech giant notes that as the technology for capturing video has improved and become less expensive, more and more applications with video components are becoming popular. For example, mixed reality applications (in which real-world physical objects or views may be augmented with virtual objects or relevant supplementary information) and virtual reality applications (in which users may traverse virtual environments) are an increasing focus of development and commercialization. In both, video data may be captured and manipulated.

For at least some applications, video data representing the environment may be processed at a device other than the video capture device itself. That is, the video data may have to be transmitted over a network path (such as a wireless link) whose bandwidth capacity may be low relative to the rate at which raw video data is captured. Apple says that, depending on the video fidelity needs of the application, “managing the flow of video data over constrained network pathways while maintaining high levels of user satisfaction with the application may present a non-trivial technical challenge.” The tech giant wants to change this.

Here’s the summary of the invention: “A multi-layer low-pass filter is used to filter a first frame of video data representing at least a portion of an environment of an individual. A first layer of the filter has a first filtering resolution setting for a first subset of the first frame, while a second layer of the filter has a second filtering resolution setting for a second subset. The first subset includes a data element positioned along a direction of a gaze of the individual, and the second subset of the frame surrounds the first subset. A result of the filtering is compressed and transmitted via a network to a video processing engine configured to generate a modified visual representation of the environment.”
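To make the summary more concrete, here is a minimal Python sketch of the general idea: keep the region around the gaze point sharp, low-pass filter the surrounding region more heavily, then compress the result before sending it on. This is only an illustration under stated assumptions, not Apple's implementation. The patent summary does not specify the filter type, the shape of the regions, or the compression scheme, so the box blur, the circular gaze region, the use of `zlib`, and the names `box_blur` and `foveated_filter` are all hypothetical choices made for this example.

```python
import zlib
import numpy as np

def box_blur(img, radius):
    """Simple low-pass filter: mean over a (2r+1) x (2r+1) window.

    A box filter is an assumption here; the patent does not name a kernel.
    """
    if radius == 0:
        return img.astype(np.float64)  # radius 0 leaves the pixels untouched
    k = 2 * radius + 1
    h, w = img.shape
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    # Separable box filter: average shifted copies along rows, then columns.
    rows = sum(padded[i:i + h, :] for i in range(k)) / k
    return sum(rows[:, j:j + w] for j in range(k)) / k

def foveated_filter(frame, gaze_xy, inner_radius, inner_blur=0, outer_blur=4):
    """Two-layer filter on a grayscale frame (2-D uint8 array).

    Layer 1 (pixels within inner_radius of the gaze point) uses inner_blur;
    layer 2 (everything surrounding it) uses the stronger outer_blur.
    The circular region and the default radii are illustrative assumptions.
    """
    h, w = frame.shape
    ys, xs = np.mgrid[0:h, 0:w]
    gx, gy = gaze_xy
    inner_mask = np.hypot(xs - gx, ys - gy) <= inner_radius
    sharp = box_blur(frame, inner_blur)
    soft = box_blur(frame, outer_blur)
    out = np.where(inner_mask, sharp, soft)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)

def compress_frame(frame):
    """Stand-in for the compression step; a real system would use a video codec."""
    return zlib.compress(frame.tobytes())
```

In this sketch, calling `foveated_filter(frame, (x, y), inner_radius)` and passing the result to `compress_frame` would mirror the filter-then-compress order described in the summary. Because the periphery carries less high-frequency detail after filtering, it generally compresses to fewer bytes, which is the bandwidth saving the patent is after.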