Apple has filed for a patent (number 20200043237) for a “media compositor for computer-generated reality” that would allow iPhones and iPads to “film” computer-generated reality content, overlaying virtual objects on real-world scenes shown on the devices.

The idea relates to three-dimensional (3D) content, and in particular to systems, methods, and devices for recording or streaming computer-generated reality (CGR) content. In the patent filing, Apple says that existing computing systems and apps don’t “adequately” facilitate the recording or streaming of CGR content.

The tech giant wants users to be able to interact with audio objects that create a 3D or spatial audio environment, one that provides the perception of point audio sources in 3D space.

Examples of CGR include virtual reality and mixed reality. A virtual reality (VR) environment refers to a simulated environment that is designed to be based entirely on computer-generated sensory inputs for one or more senses. A VR environment comprises virtual objects with which a person may sense and/or interact. For example, computer-generated imagery of trees, buildings, and avatars representing people are examples of virtual objects. A person may sense and/or interact with virtual objects in the VR environment through a simulation of the person’s presence within the computer-generated environment, and/or through a simulation of a subset of the person’s physical movements within the computer-generated environment.

In contrast to a VR environment, which is designed to be based entirely on computer-generated sensory inputs, a mixed reality (MR) environment refers to a simulated environment that is designed to incorporate sensory inputs from the physical environment, or a representation thereof, in addition to including computer-generated sensory inputs (e.g., virtual objects). On a virtuality continuum, a mixed reality environment is anywhere between, but not including, a wholly physical environment at one end and a virtual reality environment at the other end.

In some MR environments, computer-generated sensory inputs may respond to changes in sensory inputs from the physical environment. Also, some electronic systems for presenting an MR environment may track location and/or orientation with respect to the physical environment to enable virtual objects to interact with real objects (that is, physical articles from the physical environment or representations thereof). For example, a system may account for movements so that a virtual tree appears stationary with respect to the physical ground.
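To make the "stationary tree" idea concrete, here is a minimal 2D sketch of how a world-anchored virtual object can be re-expressed in a moving device's coordinates. It is an illustration only, not taken from the patent: the function name `world_to_device`, the 2D simplification, and the convention that the device's x axis points forward are all assumptions.

```python
import math


def world_to_device(point, device_pos, device_yaw):
    """Express a world-anchored 2D point in the device's own frame.

    Hypothetical helper for illustration. `device_yaw` is the device's
    heading in radians, measured counterclockwise from the world x axis.
    """
    # Translate so the device sits at the origin.
    dx = point[0] - device_pos[0]
    dy = point[1] - device_pos[1]
    # Rotate by the inverse of the device's heading.
    c, s = math.cos(-device_yaw), math.sin(-device_yaw)
    return (c * dx - s * dy, s * dx + c * dy)


# The virtual tree is anchored at a fixed world position.
tree_world = (2.0, 0.0)

# As the tracked pose changes, the tree's device-relative position is
# recomputed each frame, so it appears fixed to the ground.
at_start = world_to_device(tree_world, (0.0, 0.0), 0.0)
after_step = world_to_device(tree_world, (1.0, 0.0), 0.0)
after_turn = world_to_device(tree_world, (0.0, 0.0), math.pi / 2)
```

The tree's world coordinates never change; only the tracked device pose does, and the renderer draws the tree wherever the transform places it for the current frame.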

Examples of mixed realities include augmented reality and augmented virtuality. An augmented reality (AR) environment refers to a simulated environment in which one or more virtual objects are superimposed over a physical environment, or a representation thereof. For example, an electronic system for presenting an AR environment may have a transparent or translucent display through which a person may directly view the physical environment.

The system may be configured to present virtual objects on the transparent or translucent display, so that a person, using the system, perceives the virtual objects superimposed over the physical environment. As used herein, a head-mounted display (HMD) in which at least some light of the physical environment may pass through a transparent or translucent display is called an “optical see through” HMD.

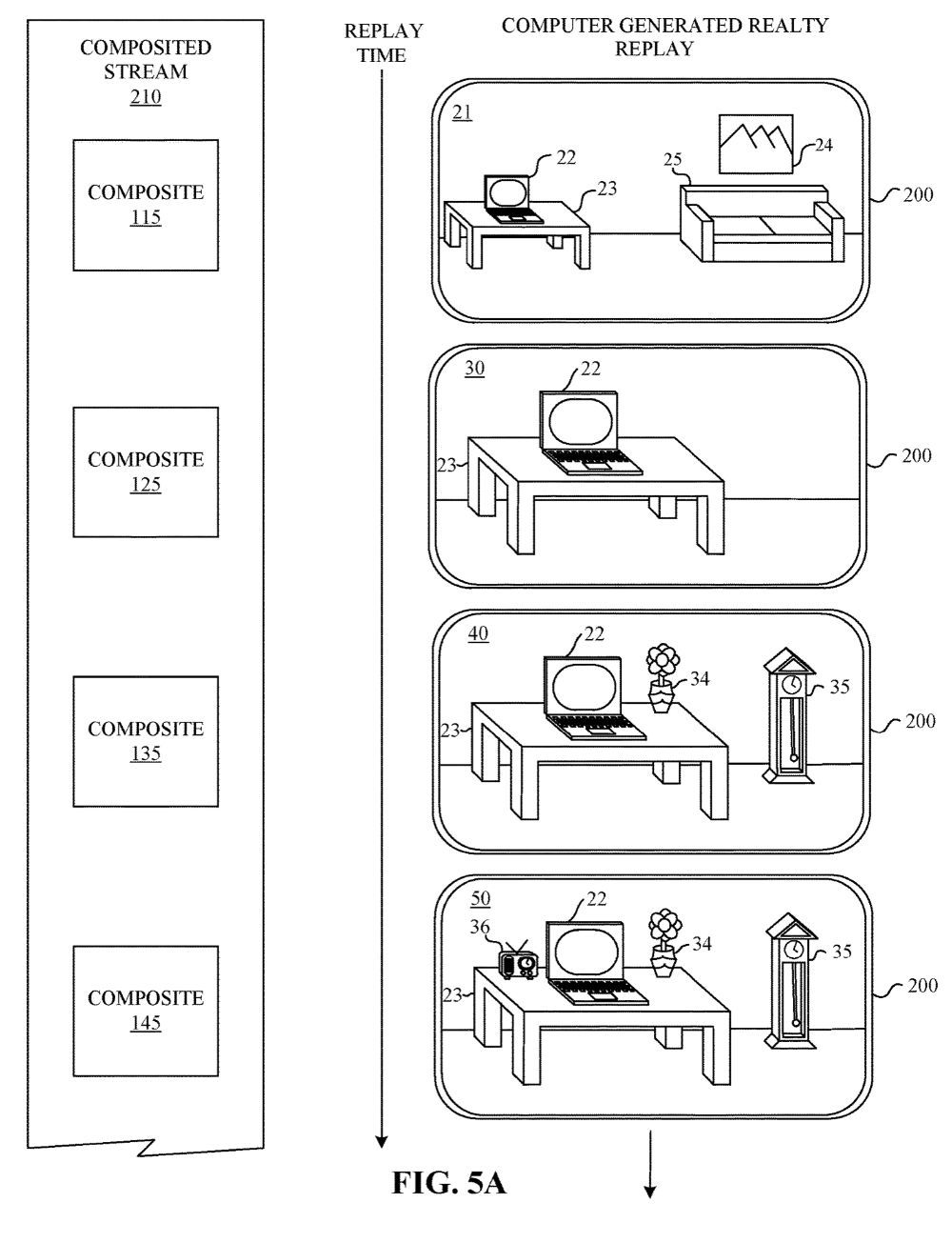

Here’s Apple’s summary of the invention: “One implementation forms a composited stream of computer-generated reality (CGR) content using multiple data streams related to a CGR experience to facilitate recording or streaming. A media compositor obtains a first data stream of rendered frames and a second data stream of additional data. The rendered frame content (e.g., 3D models) represents real and virtual content rendered during a CGR experience at a plurality of instants in time.

“The additional data of the second data stream relates to the CGR experience, for example, relating to audio, audio sources, metadata identifying detected attributes of the CGR experience, image data, data from other devices involved in the CGR experience, etc. The media compositor forms a composited stream that aligns the rendered frame content with the additional data for the plurality of instants in time, for example, by forming time-stamped, n-dimensional datasets (e.g., images) corresponding to individual instants in time.”
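As a rough illustration of the alignment step the summary describes, the sketch below pairs each sample from a second data stream with the rendered frame closest to it in time. It is not Apple's implementation: the class names, the `composite` function, and the 20 ms matching tolerance are all hypothetical choices made for this example.

```python
from bisect import bisect_left
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class RenderedFrame:
    timestamp: float  # seconds since the start of the CGR experience
    pixels: Any       # placeholder for the rendered frame content


@dataclass
class AdditionalSample:
    timestamp: float
    kind: str         # e.g. "audio" or "metadata" (illustrative labels)
    payload: Any


@dataclass
class CompositedEntry:
    timestamp: float
    frame: RenderedFrame
    extras: List[AdditionalSample] = field(default_factory=list)


def composite(frames, samples, tolerance=0.02):
    """Attach each additional sample to the nearest frame in time.

    Samples farther than `tolerance` seconds from every frame are
    dropped. The tolerance value is an assumption for this sketch.
    """
    frames = sorted(frames, key=lambda f: f.timestamp)
    entries = [CompositedEntry(f.timestamp, f) for f in frames]
    times = [f.timestamp for f in frames]
    if not times:
        return entries
    for sample in samples:
        i = bisect_left(times, sample.timestamp)
        # The nearest frame is either just before or just after index i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
        nearest = min(candidates, key=lambda j: abs(times[j] - sample.timestamp))
        if abs(times[nearest] - sample.timestamp) <= tolerance:
            entries[nearest].extras.append(sample)
    return entries


frames = [RenderedFrame(0.0, "f0"), RenderedFrame(0.033, "f1"), RenderedFrame(0.066, "f2")]
samples = [
    AdditionalSample(0.001, "audio", b"..."),
    AdditionalSample(0.034, "metadata", {"detected": "table"}),
    AdditionalSample(0.5, "audio", b"..."),  # too far from any frame
]
stream = composite(frames, samples)
```

Each entry in `stream` is a time-stamped record holding one frame plus whatever additional data landed near it, which loosely mirrors the "time-stamped, n-dimensional datasets" the filing mentions.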