A new Apple patent filing (number 20200236489) for “predictive head-tracked binaural audio rendering” hints at even better audio for upcoming AirPods, AirPods Pro, or the rumored “Apple Studio” over-the-ear headset. If realized, the earbuds and/or headset could adjust incoming audio based on a user’s head movements.

This could come in handy when viewing augmented reality or virtual reality scenes on an iPad, iPhone, or Mac. Presumably, the rumored “Apple Glasses” will have built-in audio.

In the patent filing, Apple notes that virtual reality (VR) allows users to experience and/or interact with an immersive artificial environment, such that the user feels as if they were physically in that environment. For example, virtual reality systems may display stereoscopic scenes to users in order to create an illusion of depth, and a computer may adjust the scene content in real time to provide the illusion of the user moving within the scene.

When the user views images through a virtual reality system, the user may feel as if they are moving within the scenes from a first-person point of view. Similarly, mixed reality (MR) combines computer-generated information (referred to as virtual content) with real-world images or a real-world view to augment, or add content to, a user’s view of the world, or alternatively combines virtual representations of real-world objects with views of a three-dimensional (3D) virtual world. Head-tracked binaural audio would contribute to the experience by keeping sounds anchored to their apparent positions in the scene as the user’s head turns.

The simulated environments of virtual reality and/or the mixed environments of mixed reality may thus be utilized to provide an interactive user experience for multiple applications.

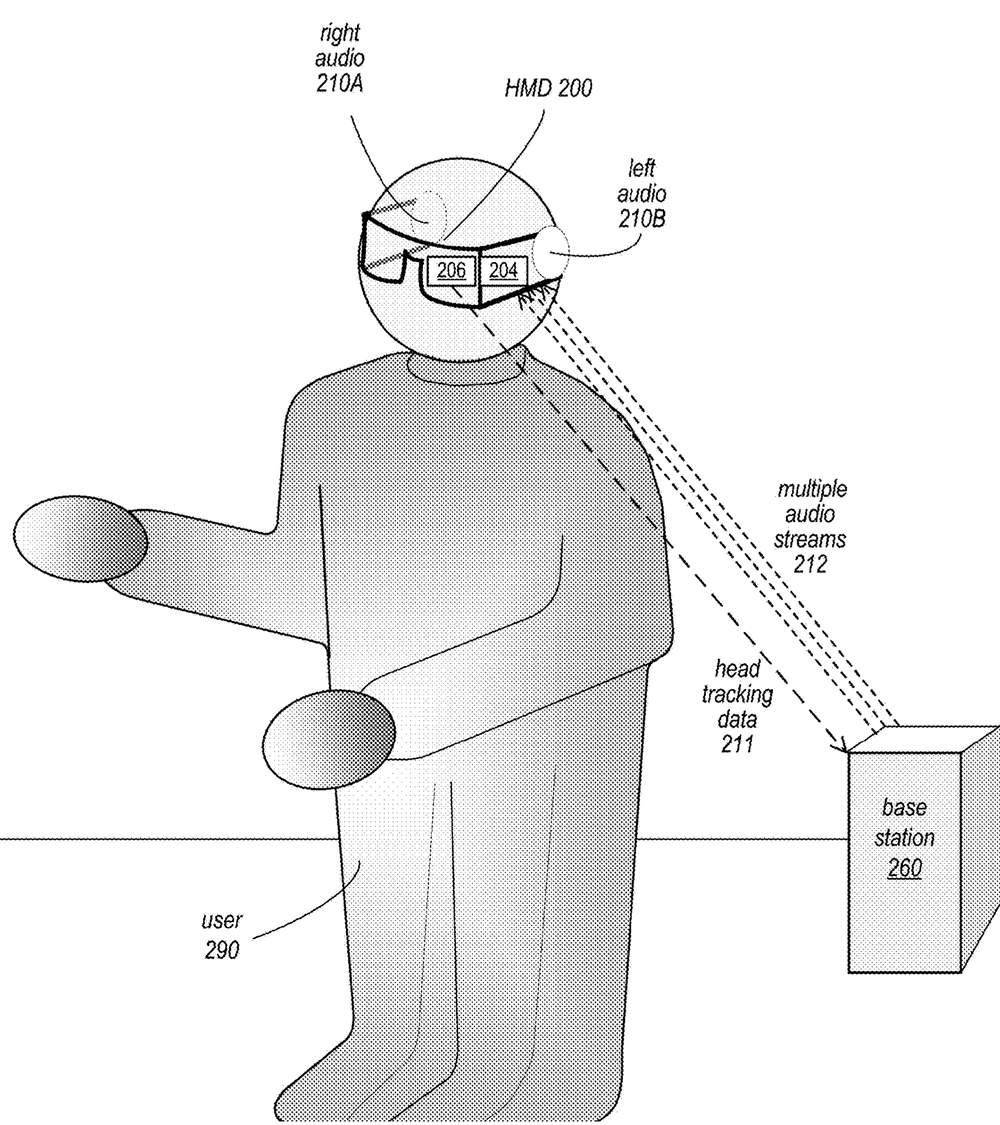

Here’s the summary of the invention: “Methods and apparatus for predictive head-tracked binaural audio rendering in which a rendering device renders multiple audio streams for different possible head locations based on head tracking data received from a headset, for example audio streams for the last known location and one or more predicted or possible locations, and transmits the multiple audio streams to the headset.

“The headset then selects and plays one of the audio streams that is closest to the actual head location based on current head tracking data. If none of the audio streams closely match the actual head location, two closest audio streams may be mixed. Transmitting multiple audio streams to the headset and selecting or mixing an audio stream on the headset may mitigate or eliminate perceived head tracking latency.”
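The headset-side step described in the summary can be illustrated with a rough sketch. This is not Apple's implementation. It assumes that head location is reduced to a single yaw angle in degrees, that each stream is one block of mono samples, and that "closely match" means falling within a fixed angular threshold. The names `RenderedStream`, `select_or_mix`, and `match_threshold_deg` are hypothetical and do not come from the filing.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RenderedStream:
    """One pre-rendered audio block (hypothetical structure)."""
    yaw_deg: float        # head yaw this block was rendered for
    samples: List[float]  # mono samples, for brevity


def angular_distance(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    diff = abs(a - b) % 360.0
    return min(diff, 360.0 - diff)


def select_or_mix(
    streams: List[RenderedStream],
    actual_yaw_deg: float,
    match_threshold_deg: float = 5.0,  # assumed tolerance, not from the patent
) -> List[float]:
    """Pick the stream closest to the actual head yaw, or mix the two closest."""
    if not streams:
        raise ValueError("at least one rendered stream is required")

    # Rank candidate streams by how far their yaw is from the actual yaw.
    ranked = sorted(
        streams, key=lambda s: angular_distance(s.yaw_deg, actual_yaw_deg)
    )
    best = ranked[0]
    d_best = angular_distance(best.yaw_deg, actual_yaw_deg)

    # Close enough (or nothing to mix with): play the best stream as-is.
    if d_best <= match_threshold_deg or len(ranked) == 1:
        return list(best.samples)

    # Otherwise cross-fade the two closest streams, weighting the nearer
    # one more heavily (simple linear weights; an assumption of this sketch).
    second = ranked[1]
    d_second = angular_distance(second.yaw_deg, actual_yaw_deg)
    w_best = d_second / (d_best + d_second)
    w_second = 1.0 - w_best
    return [
        w_best * a + w_second * b
        for a, b in zip(best.samples, second.samples)
    ]
```

In this sketch, the rendering device would send, say, one stream for the last known yaw and one for a predicted yaw; the headset then calls `select_or_mix` with its freshest tracking reading, so the choice is made locally rather than after another round trip to the rendering device.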