A newly granted Apple patent (number 10,572,072) for “depth-based touch detection” shows the company still has big plans for ARKit, the rumored “Apple Glasses,” and augmented reality/virtual reality on its iOS, iPadOS, and perhaps macOS devices.

ARKit allows developers to tap into the latest computer vision technologies to build detailed virtual content on top of real-world scenes for interactive gaming, immersive shopping experiences, industrial design and more.

As for Apple Glasses, such a device will arrive this year, next year, or 2021, depending on which rumor you believe. It may or may not have to be tethered to an iPhone to work. Other rumors say that Apple Glasses could have a custom-built Apple chip and a dedicated operating system dubbed “rOS” for “reality operating system.”

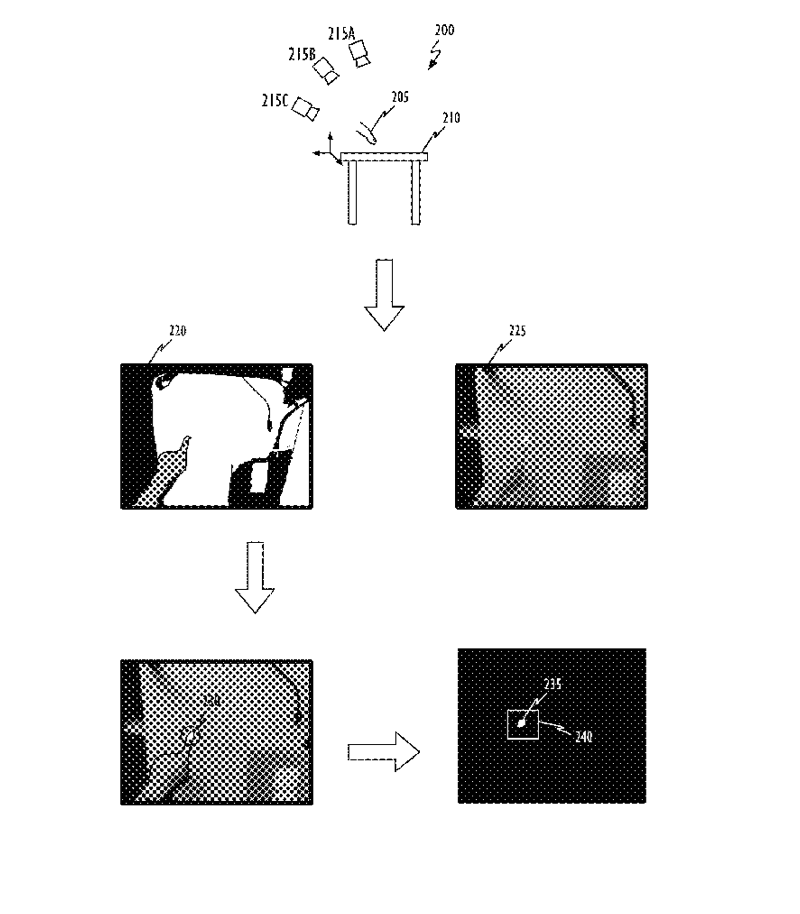

In the patent filing, Apple says that detecting when and where a user’s finger touches a real environmental surface can enable intuitive interactions between the user, the environment, and a hardware system (e.g., a computer or gaming system). Using cameras for touch detection has many advantages over methods that rely on sensors embedded in a surface (e.g., capacitive sensors).

What’s more, some modern digital devices, such as head-mounted devices (HMDs) and smartphones, are equipped with vision sensors, including depth cameras. Current depth-based touch detection approaches use depth cameras to provide distance measurements between the camera and the finger and between the camera and the environmental surface.

One approach requires a fixed depth camera setup and cannot be applied to dynamic scenes. Another approach first identifies the finger, segments it, and then flood fills neighboring pixels outward from the center of the fingertip; when enough pixels have been filled, a touch is detected.
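To make the flood-fill approach more concrete, here is a minimal sketch of how such a test might work. It assumes the finger has already been identified and segmented, so only the fingertip center (`seed`) is passed in, and it assumes the intuition that a touching finger is depth-continuous with the surface (so the fill leaks into the surface) while a hovering finger is separated by a depth jump. The function name and the `max_step` and `min_pixels` thresholds are hypothetical, not taken from the patent.

```python
from collections import deque


def flood_fill_touch(depth, seed, max_step=0.01, min_pixels=200):
    """Hypothetical flood-fill touch test on a raw depth map (values in metres).

    depth: list of rows of floats; seed: (row, col) of the fingertip centre.
    Grows a region through 4-connected neighbours whose depth differs from the
    current pixel by at most max_step. If the region reaches min_pixels, the
    finger is assumed to be contiguous with (i.e. touching) the surface.
    """
    rows, cols = len(depth), len(depth[0])
    seen = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in seen:
                # Compares raw depth values only; nothing is normalised,
                # which is the weakness Apple points out.
                if abs(depth[nr][nc] - depth[r][c]) <= max_step:
                    seen.add((nr, nc))
                    queue.append((nr, nc))
                    if len(seen) >= min_pixels:
                        return True
    return len(seen) >= min_pixels
```

Because the thresholds operate on raw camera-relative depth, the same finger can pass or fail depending on how far away and at what angle the camera sits, which is the kind of fragility the patent describes.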

However, Apple says that because this approach does not even consider normalizing pixel depth data, it can be “quite error prone.” Apple thinks it can do better.

Here’s a summary of the patent: “Systems, methods, and computer readable media to improve the operation of detecting contact between a finger or other object and a surface are described. In general, techniques disclosed herein utilize a depth map to identify an object and a surface, and a classifier to determine when the object is touching the surface.

“A measure of the object’s ‘distance’ is made relative to the surface and not the camera(s) thereby providing some measure of invariance with respect to camera pose. The object-surface distance measure can be used to construct an identifier or ‘feature vector’ that, when applied to a classifier, generates an output indicative of whether the object is touching the surface. The classifier may be based on machine learning and can be trained off-line before run-time operations are commenced. In some embodiments, temporal filtering may be used to improve surface detection operations.”
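The pipeline in that summary (a surface-relative distance, a feature vector, a classifier trained offline, and temporal filtering) can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not Apple’s implementation: it assumes the surface has already been found and is modeled as a plane (a point plus a normal) and that fingertip pixels have already been back-projected to 3D points. The feature vector is assumed to be the `k` smallest fingertip-to-surface distances, the classifier is assumed to be a logistic model whose `weights` and `bias` come from offline training, and the temporal filter is assumed to be a moving average with hysteresis. All names and parameter values are hypothetical.

```python
import math


def plane_distance(point, plane_point, normal):
    """Signed distance from a 3-D point to the surface plane (normal need not be unit length)."""
    norm = math.sqrt(sum(n * n for n in normal))
    return sum((p - q) * n for p, q, n in zip(point, plane_point, normal)) / norm


def feature_vector(tip_points, plane_point, normal, k=4):
    """Hypothetical feature: the k smallest fingertip-to-surface distances.

    Distances are measured relative to the surface, not the camera, so they
    do not depend on where the camera happens to be.
    """
    dists = sorted(plane_distance(p, plane_point, normal) for p in tip_points)
    return dists[:k]


def classify(features, weights, bias):
    """Logistic touch score in [0, 1]; weights and bias assumed trained offline."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # numerically stable branch for very negative z
    return e / (1.0 + e)


class TemporalFilter:
    """Exponential moving average with hysteresis to suppress frame-to-frame flicker."""

    def __init__(self, alpha=0.5, on=0.6, off=0.4):
        self.alpha, self.on, self.off = alpha, on, off
        self.score, self.touching = 0.0, False

    def update(self, p):
        # Blend the new per-frame score into the running average.
        self.score = self.alpha * p + (1 - self.alpha) * self.score
        # Separate on/off thresholds keep one noisy frame from toggling the state.
        if self.touching and self.score < self.off:
            self.touching = False
        elif not self.touching and self.score > self.on:
            self.touching = True
        return self.touching
```

For example, with a surface plane at 0.5 m in front of the camera and illustrative (untrained) weights of `[-400.0] * 4` with a bias of `4.0`, fingertip points 1 mm above the surface score above 0.9 while points 3 cm above it score near zero. Feeding those scores through `TemporalFilter` keeps a touch alive through a single hovering frame and releases it only after the average drops below the lower threshold.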