It’s that time of year again – another year over and a new one just around the corner! Christmas and several Apple keynotes have all come and gone, and we are now into the 12 Days of Christmas.

This is the first post in our annual 12-part series covering the accessibility features we would like to see Apple bring to its products in the coming year. To read last year’s requests, just click or tap this link.

This series is being put together by Accessibility Editor Alex Jurgensen, with the help of several contributors.

For the first request of Christmas, we ask Apple to give to us:

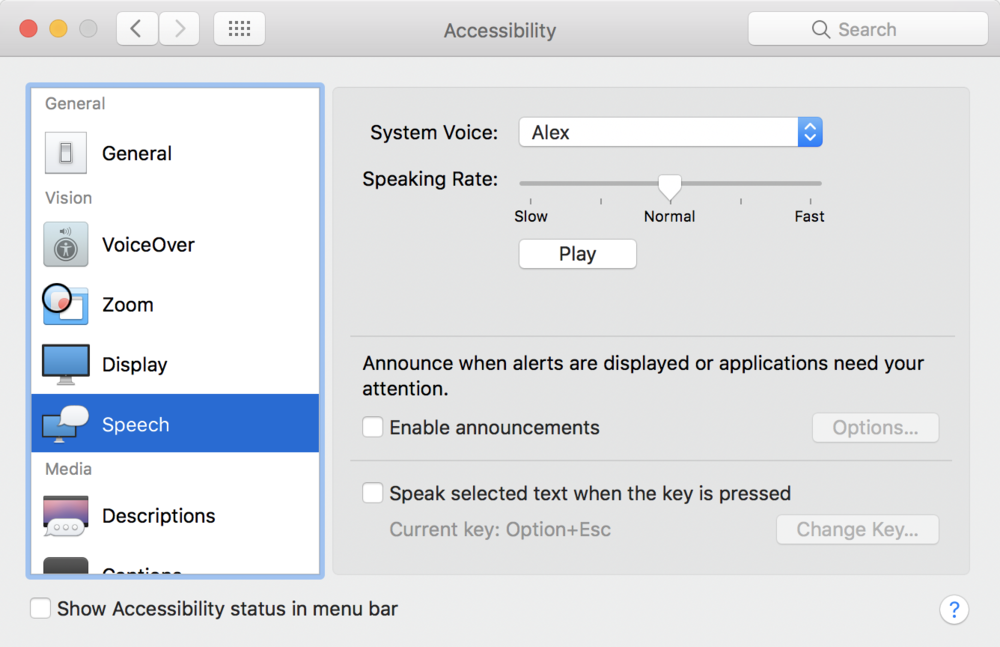

1. A New Speech Manager for macOS

To anyone who has used VoiceOver on macOS for any length of time, the frustrations of sometimes laggy speech and cross-talk, where VoiceOver talks over itself, are all too familiar. In contrast, speech on iOS is relatively smooth and seamless. One reason for this glaring difference is Apple’s legacy speech manager on macOS. The speech manager is software that runs in the background, converting text into synthetic speech and sending the result to the user’s audio output device.

Apple’s speech manager on macOS relies on many software design practices that the company itself discourages, and often outright prevents, third-party developers from using. For example, the speech manager loads every synthesizer installed on the system (the code needed to generate speech with a specific set of voices) directly as a plugin, rather than running each synthesizer as a separate process and communicating with it through the more modern mechanism of interprocess communication (IPC). This is why a single buggy synthesizer can disrupt speech output across the entire system.

In contrast to the speech manager, many apps use IPC to communicate between their components. Safari, for example, runs each webpage in its own process and uses IPC to send it commands such as “back” and “forward”. This is why a website that crashes does not take down the entire browser.

There are also important security benefits gained by using IPC, but I won’t go into those here.
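To make the difference between an in-process plugin and IPC more concrete, here is a minimal Python sketch. It is not Apple’s code and does not reflect how any real synthesizer is built: a hypothetical “synthesizer” runs as a separate process and receives text over a pipe, a simple form of IPC. If it crashes, only its own process dies and the caller simply gets `None` back; had the same code been loaded as an in-process plugin, the crash would have taken the host process down with it. `SYNTH_SOURCE` and `speak_isolated` are illustrative names invented for this example.

```python
import subprocess
import sys
from typing import Optional

# Hypothetical stand-in for a third-party synthesizer. It returns fake
# "audio" for normal text but crashes with an uncaught exception whenever
# the text contains the word "crash", simulating a buggy plugin.
SYNTH_SOURCE = r"""
import sys
text = sys.stdin.read()
if "crash" in text:
    raise RuntimeError("buggy synthesizer")
sys.stdout.write("AUDIO[" + text + "]")
"""


def speak_isolated(text: str, timeout: float = 5.0) -> Optional[str]:
    """Send text to a synthesizer running in its own process via pipes.

    Returns the synthesizer's output, or None if it crashed or hung.
    Either way, the calling process (standing in for the speech manager)
    keeps running.
    """
    try:
        result = subprocess.run(
            [sys.executable, "-c", SYNTH_SOURCE],
            input=text,
            capture_output=True,  # the child's traceback is captured, not printed
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return None  # run() kills a hung synthesizer instead of waiting forever
    if result.returncode != 0:
        return None  # the synthesizer died; only its own process was lost
    return result.stdout


if __name__ == "__main__":
    print(speak_isolated("Hello"))          # AUDIO[Hello]
    print(speak_isolated("please crash"))   # None
    print(speak_isolated("Still talking"))  # AUDIO[Still talking]
```

Launching a fresh process per utterance keeps the sketch short; a real design would more likely keep one long-lived process per synthesizer and exchange messages with it, restarting it only if it dies.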

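Beyond its antiquated way of communicating with synthesizers, the speech manager also lacks the proper queueing of speech messages found in many similar products. Without a queue that speaks messages one at a time, or deliberately interrupts the current message when something more urgent arrives, messages that arrive close together end up being spoken simultaneously. The result is cross-talk.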
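The kind of queueing we have in mind can be sketched in a few lines of Python. This is a hypothetical illustration, not a description of any Apple component: utterances wait in a first-in, first-out queue and are spoken strictly one at a time, while an `interrupt` flag lets urgent speech (for example, the response to a key press) discard stale messages instead of talking over them. `SpeechQueue`, `enqueue`, and `drain` are made-up names, and the `speak` callback stands in for a real synthesizer.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List


@dataclass
class SpeechQueue:
    """Hypothetical FIFO speech queue: one utterance at a time, in order."""

    speak: Callable[[str], None]  # stand-in for a real synthesizer
    pending: Deque[str] = field(default_factory=deque)

    def enqueue(self, text: str, interrupt: bool = False) -> None:
        if interrupt:
            # Urgent speech: discard stale messages instead of talking over them.
            self.pending.clear()
        self.pending.append(text)

    def drain(self) -> None:
        # Each utterance finishes before the next begins, so none overlap.
        while self.pending:
            self.speak(self.pending.popleft())


if __name__ == "__main__":
    spoken: List[str] = []
    queue = SpeechQueue(speak=spoken.append)
    queue.enqueue("Mail, 3 unread")
    queue.enqueue("Inbox")
    queue.enqueue("New Message", interrupt=True)  # e.g. the user pressed Command-N
    queue.enqueue("To, edit text")
    queue.drain()
    print(spoken)  # ['New Message', 'To, edit text']
```

Because this sketch is synchronous, an interrupt only drops messages that have not started yet; a real speech engine would also need to stop the utterance currently being spoken.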

Finally, iOS’s speech system can automatically detect the language of the text it is asked to speak and switch to a voice for that language. This is an important feature for users who frequently read text in several languages.
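A toy version of this idea can be sketched in Python, under loud assumptions: it is not how iOS does it. The sketch guesses the language from the Unicode script of each letter (using the standard `unicodedata` module) and routes each run of text to a voice from a hypothetical `VOICES` table. Real language identification relies on statistical models; a purely script-based guess cannot, for instance, tell French from English, since both use Latin letters.

```python
import unicodedata
from typing import List, Optional, Tuple

# Hypothetical mapping from Unicode script to a voice identifier.
VOICES = {
    "LATIN": "en-US",
    "CYRILLIC": "ru-RU",
    "GREEK": "el-GR",
    "HEBREW": "he-IL",
    "ARABIC": "ar-SA",
    "HANGUL": "ko-KR",
}
DEFAULT_VOICE = "en-US"


def script_of(ch: str) -> Optional[str]:
    """Return the script prefix of a letter's Unicode name (e.g. 'CYRILLIC')."""
    if not ch.isalpha():
        return None  # digits, spaces and punctuation carry no script signal
    name = unicodedata.name(ch, "")
    return name.split(" ", 1)[0] or None


def split_by_voice(text: str) -> List[Tuple[str, str]]:
    """Split text into runs, each tagged with the voice for its script."""
    runs: List[Tuple[str, str]] = []
    voice: Optional[str] = None  # voice of the run being built
    buffer = ""
    for ch in text:
        script = script_of(ch)
        if script is not None:
            ch_voice = VOICES.get(script, DEFAULT_VOICE)
            if voice is not None and ch_voice != voice:
                runs.append((voice, buffer))  # close the previous run
                buffer = ""
            voice = ch_voice
        buffer += ch  # non-letters stay with the current run
    if buffer:
        runs.append((voice or DEFAULT_VOICE, buffer))
    return runs


if __name__ == "__main__":
    for voice, chunk in split_by_voice("Привет, world! Γεια"):
        print(voice, repr(chunk))
    # ru-RU 'Привет, '
    # en-US 'world! '
    # el-GR 'Γεια'
```

Each run could then be handed to a synthesizer with the matching voice, so a message mixing Russian, English, and Greek is read with three voices rather than one.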

For the above reasons, it is clear that macOS’s speech manager is getting long in the tooth and puts the effectiveness of VoiceOver at risk. Therefore, we ask Apple to take the time to either port the AVSpeechSynthesizer speech APIs (part of AVFoundation) used by its other operating systems or otherwise rewrite the desktop speech manager. A combined approach will likely be needed to ensure that the unique needs of the desktop are addressed.

A new speech system on macOS may also fix other long-standing VoiceOver bugs not listed above, including some that may seem unrelated to speech at first glance. However, this is only speculation on our part. Either way, a new speech system would address a very real set of system-wide bugs and strengthen VoiceOver, Siri, and the other components of macOS that rely on the speech manager.

The Christmas season is upon us and the spirit of giving is in the air. Writing articles for the Accessible Apple column takes time and energy. If you enjoy Accessible Apple articles and would like to see them continue into 2017, please consider making a donation to Camp Bowen, a summer camp for the visually impaired and blind that promotes independent living, education, and the fostering of creativity. A few dollars can go a long way towards ensuring this valuable program can continue to serve generations to come.